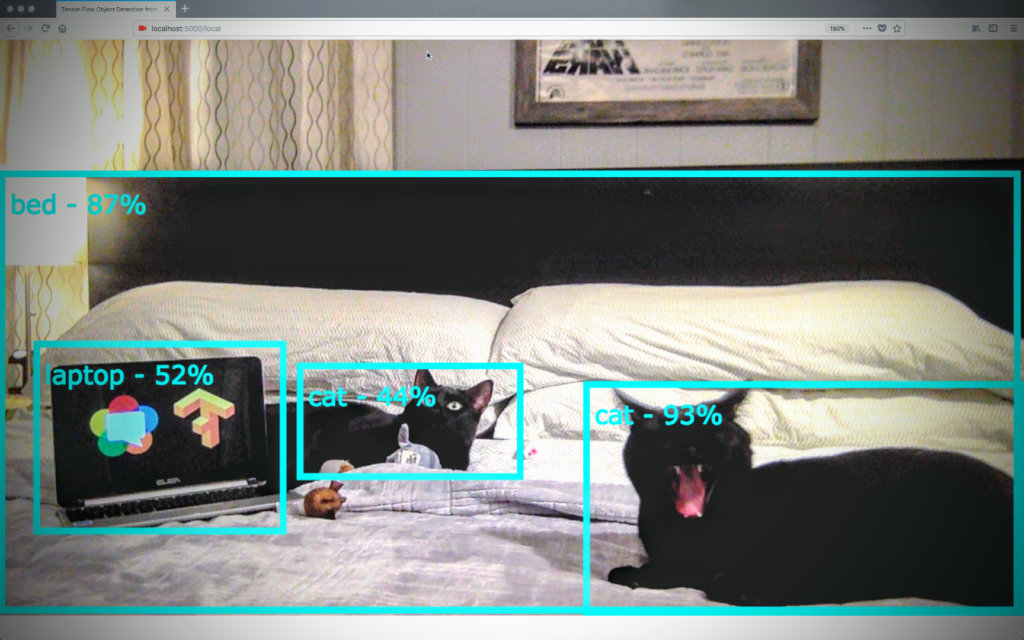

WebRTC is gaining popularity for efficient, low-latency video streaming over the web. You may want to use it to stream your own images from a Python server to a browser, for example frames rendered locally with OpenCV (cv2) or PIL. This is a good way to view real-time video from a browser. A popular Python WebRTC library is aiortc, although note that it supports a limited number of codecs. The library comes with useful example code; its webcam example shows how to use WebRTC transceivers to stream video and audio from a server to the browser. But perhaps you would rather stream your own set of images as a video, using Python to create a WebRTC video stream from images. One example use case is visualizing AI processing in real time, such as drawing boxes around identified objects.
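Whatever renders your images, each one should end up as a `height x width x 3` NumPy array of `uint8` values in BGR channel order, the layout OpenCV uses and the one PyAV's `VideoFrame.from_ndarray(..., format="bgr24")` accepts. As a minimal sketch of the object-identification use case, assuming only NumPy, the hypothetical helpers `blank_frame` and `draw_box` below build such a frame with a green box on it:

```python
import numpy as np


def blank_frame(width: int, height: int) -> np.ndarray:
    # bgr24 layout: rows x columns x 3 channels (B, G, R), one byte per channel
    return np.zeros((height, width, 3), dtype=np.uint8)


def draw_box(frame: np.ndarray, x0: int, y0: int, x1: int, y1: int,
             color=(0, 255, 0), thickness: int = 2) -> np.ndarray:
    """Hypothetical helper: draw a rectangle outline in place.

    (x0, y0) is the top-left corner, (x1, y1) the exclusive bottom-right corner.
    The default color (0, 255, 0) is green in BGR order.
    """
    t = thickness
    frame[y0:y0 + t, x0:x1] = color  # top edge
    frame[y1 - t:y1, x0:x1] = color  # bottom edge
    frame[y0:y1, x0:x0 + t] = color  # left edge
    frame[y0:y1, x1 - t:x1] = color  # right edge
    return frame


# A 640x480 frame with a box around a (hypothetical) detected object
frame = draw_box(blank_frame(640, 480), 100, 50, 300, 200)
```

If OpenCV is installed, `cv2.rectangle(frame, (100, 50), (299, 199), (0, 255, 0), 2)` draws a similar box and is the more usual choice; the NumPy version just makes the array layout explicit.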


To stream your own images, you can follow an example such as this OpenAI Gym streamer. The key is to create your own `VideoStreamTrack` subclass whose `recv` method takes the next image you want to publish and returns it as a video frame over the WebRTC connection:

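```python
import asyncio

import numpy
from aiortc import VideoStreamTrack
from av import VideoFrame


class ImageRenderingTrack(VideoStreamTrack):
    """A video track that sends images pushed into it by your own code."""

    def __init__(self):
        super().__init__()
        # Bounded buffer so frames cannot pile up without limit
        self.queue = asyncio.Queue(10)

    def add_image(self, img: numpy.ndarray):
        """Non-blocking: drops the image if the queue is already full."""
        try:
            self.queue.put_nowait(img)
        except asyncio.QueueFull:
            pass

    async def add_image_async(self, img: numpy.ndarray):
        """Waits until the queue has room, slowing the producer down."""
        await self.queue.put(img)

    async def recv(self):
        # aiortc calls recv() each time the sender needs the next frame
        img = await self.queue.get()
        # Expects an HxWx3 uint8 array in BGR channel order (OpenCV's default)
        frame = VideoFrame.from_ndarray(img, format="bgr24")
        # next_timestamp() returns (pts, time_base) and sleeps as needed to pace
        # frames (about 30 fps in aiortc's VideoStreamTrack)
        pts, time_base = await self.next_timestamp()
        frame.pts = pts
        frame.time_base = time_base
        return frame
```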
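The bounded queue is filled in one of two ways: `add_image` drops the new image when the queue is full, while `add_image_async` suspends the producer until `recv` makes room. The following stdlib-only sketch (no aiortc needed; the `demo` coroutine and its small queue size are hypothetical) shows the difference on a plain `asyncio.Queue`:

```python
import asyncio


async def demo(maxsize: int = 3, n_frames: int = 5):
    # Drop-when-full, as in add_image(): the caller never waits
    q_drop: asyncio.Queue = asyncio.Queue(maxsize)
    dropped = 0
    for i in range(n_frames):
        try:
            q_drop.put_nowait(i)
        except asyncio.QueueFull:
            dropped += 1  # the newest frame is discarded; queued frames survive

    # Backpressure, as in add_image_async(): the caller waits for the consumer
    q_wait: asyncio.Queue = asyncio.Queue(maxsize)
    received = []

    async def consumer():
        # Stands in for recv(), which takes one frame per call
        for _ in range(n_frames):
            received.append(await q_wait.get())

    task = asyncio.create_task(consumer())
    for i in range(n_frames):
        await q_wait.put(i)  # suspends while the queue is full
    await task

    kept = [q_drop.get_nowait() for _ in range(q_drop.qsize())]
    return kept, dropped, received


kept, dropped, received = asyncio.run(demo())
```

Here `kept` holds the first three frames and two are dropped, whereas the backpressure queue delivers all five in order. For a live preview you might prefer discarding the *oldest* queued frame instead, so the viewer always sees the latest image; that is a design choice of yours, not something aiortc requires.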

If your images are generated by an async function running on the event loop, use `add_image_async`, which waits for the queue to have room before adding an image, so the producer slows down instead of losing frames. If your images are generated in a separate thread, use the non-async `add_image`, which drops the image when the queue is full. Be careful when mixing threads with asyncio: `asyncio.Queue` is not thread-safe, so from a worker thread schedule the call on the event loop, for example with `loop.call_soon_threadsafe(track.add_image, img)`, rather than calling `add_image` directly. In either case, create the `ImageRenderingTrack` object once and use dependency injection to pass it to wherever your images are generated, so that each image can be added as soon as it is rendered.
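To make the threaded case concrete, here is a minimal stdlib-only sketch. `FrameSink` is a hypothetical stand-in for `ImageRenderingTrack` (same bounded queue and drop-when-full `add_image`), and strings stand in for rendered images; the point is that the worker thread never touches the queue itself but hands each frame to the event loop with `loop.call_soon_threadsafe`:

```python
import asyncio
import threading


class FrameSink:
    """Hypothetical stand-in for ImageRenderingTrack's queue handling."""

    def __init__(self, maxsize: int = 10):
        self.queue: asyncio.Queue = asyncio.Queue(maxsize)

    def add_image(self, img) -> None:
        try:
            self.queue.put_nowait(img)
        except asyncio.QueueFull:
            pass  # drop the frame rather than block


def render_in_thread(loop: asyncio.AbstractEventLoop, sink: FrameSink, n_frames: int) -> None:
    # Runs in a worker thread. asyncio.Queue is not thread-safe, so each frame
    # is passed to the event loop, which then calls sink.add_image() itself.
    for i in range(n_frames):
        img = f"frame-{i}"  # placeholder for a rendered numpy image
        loop.call_soon_threadsafe(sink.add_image, img)


async def main(n_frames: int = 5):
    loop = asyncio.get_running_loop()
    sink = FrameSink()
    worker = threading.Thread(target=render_in_thread, args=(loop, sink, n_frames))
    worker.start()
    # Stands in for recv(): consume frames on the event loop
    frames = [await sink.queue.get() for _ in range(n_frames)]
    worker.join()
    return frames


frames = asyncio.run(main())
```

If the thread should instead wait for room in the queue, `asyncio.run_coroutine_threadsafe(track.add_image_async(img), loop).result()` submits the coroutine to the loop and blocks the worker thread until it completes.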

During signaling, add the `ImageRenderingTrack` object to the RTC peer connection on your server, for example with `pc.addTrack(video_track)`, before you create the answer so that the track is included in it.

By using the `ImageRenderingTrack` above as the video track of your RTC peer connection, you can create a WebRTC video stream from your own images.
